How to deliver a secure, scalable, high-performance virtual desktop environment

OVH and wokati Technologies

Executive summary

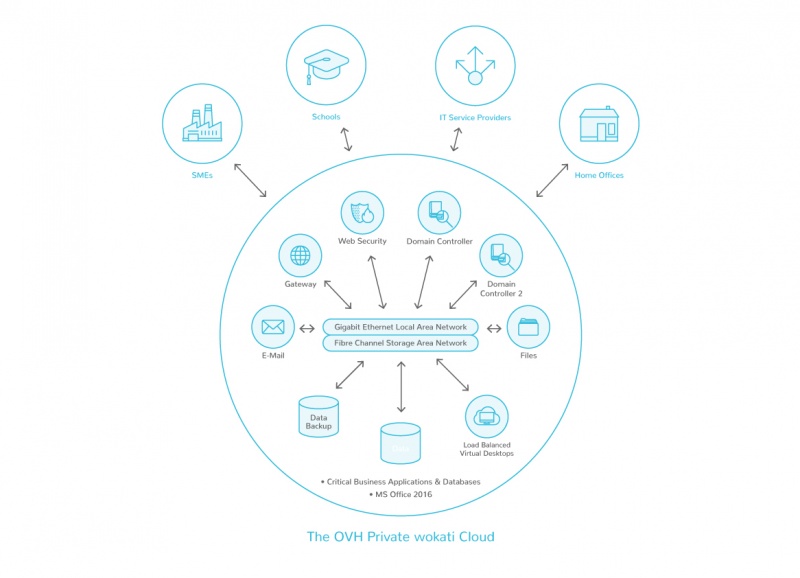

wokati Technologies offers fast, flexible, and secure cloud computing solutions for organisations at every level. It does so through the wokatiBox Cloud PC, a 2-inch cloud-powered desktop cube that gives users easy access to a best-in-class virtual desktop. Users depend on the system's speed of deployment, its robust data protection and performance, and its capacity to scale quickly and cost-effectively as requirements evolve. As a result, wokati Technologies has very specific requirements when choosing the partners with which it builds and maintains its own infrastructure.

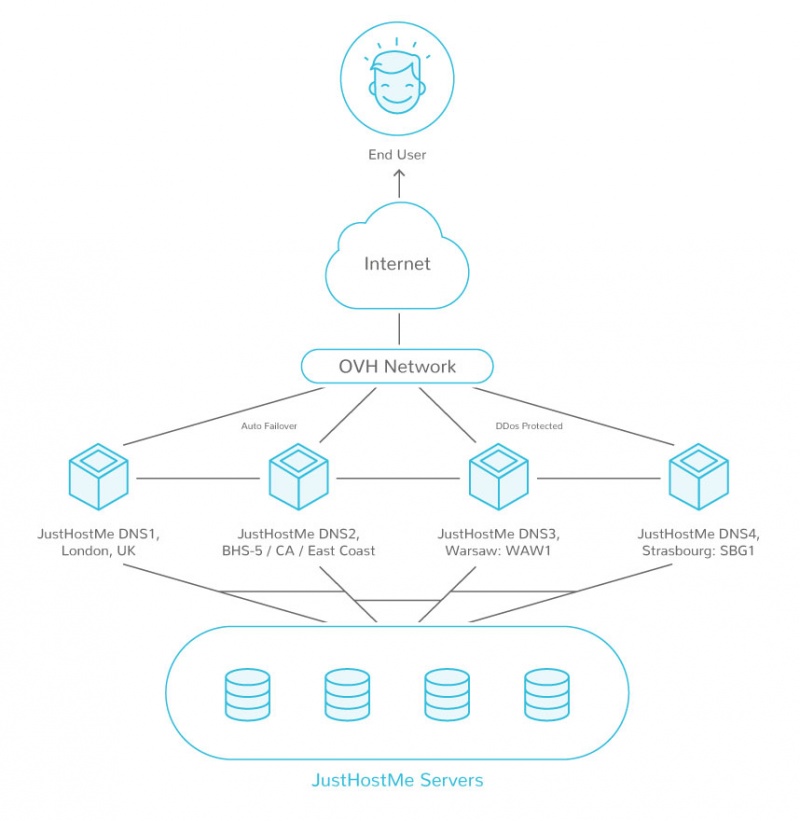

To this end, wokati Technologies chose OVH as its data centre partner to deliver the performance its users expect. Its virtual desktop services are now hosted exclusively in the OVH Private Cloud, on a global data centre infrastructure with redundant elements and comprehensive service level agreements (SLAs) that help guarantee world-class quality of service at all times.

"I am very pleased with our decision to adopt OVH as our core global data centre and network infrastructure. Our initial projects have delivered immediate, measurable benefits to our core business at a time when we are going to market rapidly. This bodes well for the future."
Ashok Reddy, founder and CEO, wokati Technologies UK

The challenge

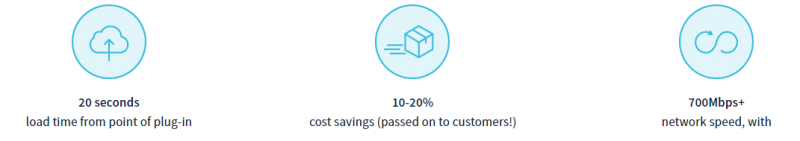

Speed, security, space, and simplicity (in terms of performance, deployment, and development) are vital in today's digital landscape, and the wokatiBox Cloud PC is designed to meet these demands, with an average application load time of 20 seconds. This means that new virtual machines must be ready to deploy and use at any moment, so that users can quickly scale up their infrastructure by adding new virtual machines with a single click. Flexibility, both in the choice of hardware and in the configuration of the virtualisation technology, was vital to wokati Technologies' long-term plans.

In addition, wokati Technologies' users expect fully consistent performance and data security at all times, with the ability to absorb peak loads and no unexpected downtime during system or hardware failures. Guaranteed SLAs are therefore essential to ensure security and quality of service, and to give users complete peace of mind as their infrastructures evolve.

"Many companies invest in large servers that they can grow into (like wearing baggy trousers when we were kids!), but most computers run at no more than 30% of capacity, and in terms of storage they probably use only a small percentage. People are paying for empty space, but with OVH and virtualisation they don't need to, because we fill the servers to the max. And thanks to virtualisation we have an inherently green technology, since it costs a lot to run a data centre."
Ashok Reddy, founder and CEO, wokati Technologies UK

The solution

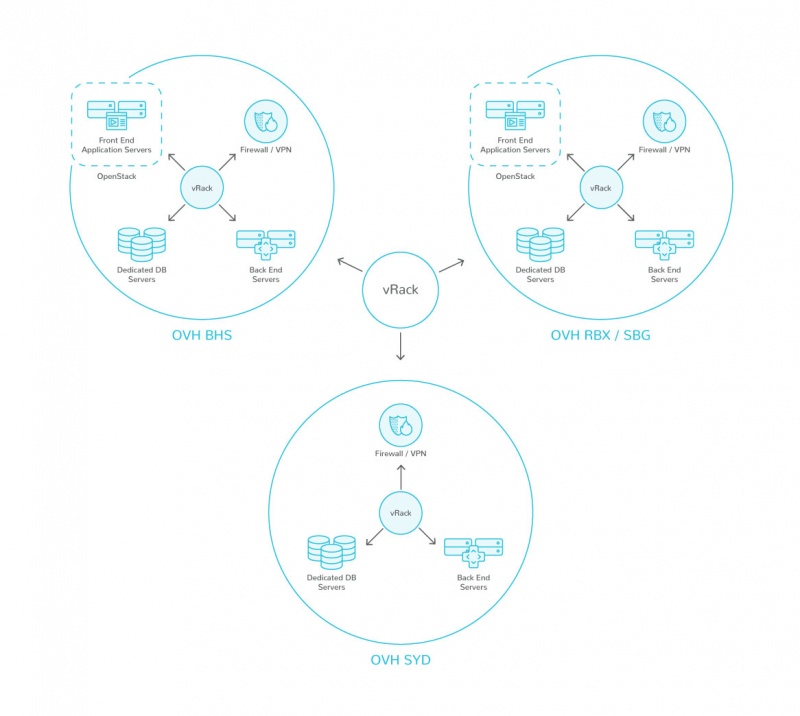

wokati Technologies' virtual desktop infrastructure was built entirely in the OVH Private Cloud and is hosted in the Roubaix data centres in France. This followed intensive consultation between the wokati Technologies and OVH teams to design and deliver an infrastructure that could meet (and consistently exceed) end users' needs, both now and in the future. A key part of this was the use of the VMware vSphere Hypervisor to deploy and configure new virtual machines quickly, wherever and whenever they were needed.

This solution not only met wokati Technologies' stringent performance requirements, cutting the wokatiBox's application load time from one minute to 20 seconds from the point of plug-in, but did so while saving 10-20% compared with public cloud infrastructure. In addition, network speed tests showed that speeds of over 700 Mbit/s were easily achievable for both upload and download, with latency below 10 ms.

Using a European data centre proved an important advantage for wokati Technologies, giving it access to support in its own time zone and allowing it to work closely with OVH experts to ensure that its chosen hardware would be fit for purpose. This included selecting the exact specifications of the servers, then putting them through an intensive testing period to ensure they could handle the expected peak loads while leaving capacity for future scaling. The process helped wokati develop in-depth knowledge of how its virtual machines could be deployed most effectively, so that it could focus on monitoring and improving performance once they went live. As part of this design process, redundant elements were included where necessary to keep the system running without interruption even when the unexpected occurs.

During the deployment of wokati Technologies' private cloud infrastructure, guaranteed SLAs were agreed and put in place to ensure that performance would remain consistent regardless of any change in circumstances, barring "acts of God". OVH's well-established network meant that setting up these SLAs was relatively simple and incurred no additional cost, as the necessary measures were already built into the infrastructure.

Both before and after deployment, the educational resources on virtualisation and cloud computing provided by OVH and VMware proved extremely useful to the wokati team. These materials helped them make the most of OVH's resources and respond effectively to customer requests. They included online guides and videos, as well as regular Private Cloud training offered during OVH Academies at our London offices.

The result

The successful partnership between wokati Technologies and OVH is already approaching the next stage of its development, with experts from both companies collaborating to identify areas where the infrastructure can be developed further. In particular, the launch of OVH's flagship UK data centre has opened up the possibility of extending wokati's network across both data centres, with extensive disaster recovery measures. Implementation of an NSX firewall is also planned for the near future, to further increase the security of customer data and wokati's level of control and flexibility.

Direct contact with OVH's senior managers gives wokati Technologies the opportunity to influence the long-term technology roadmap. In keeping with this spirit of collaboration, a dedicated, accessible OVH account team is in constant contact with wokati's own teams to review progress and identify new opportunities.

Discussions are under way about developing GPU capabilities within the Private Cloud to better serve the needs of a leading architecture firm that uses wokati's services. The partnership between wokati Technologies and OVH has provided an ideal foundation for driving such innovations.

"What matters is the relationship with OVH, our high-level technical partnership, because we are about to make a lifelong commitment. In that respect, I see our growth as collective growth."
Ashok Reddy, founder and CEO, wokati Technologies UK