OVH has deployed, operated and maintained its own fibre-optic network since 2006. It links 32 points of presence (PoPs) around the world. How does this infrastructure benefit our customers? Why do we need to keep developing it? And what does "network capacity" actually mean? Read on to find out.

What does "network capacity" mean?

All 28 of our data centres are connected to the Internet via the OVH backbone. By this we mean our own network, managed by our own teams. This network spans 32 points of presence (PoPs), which are linked to one another by fibre-optic cables.

Each PoP acts as a motorway junction between OVH and other providers. These can include Internet service providers, cloud providers and telecoms operators. When we talk about network capacity, we mean the combined capacity of all these "junctions". It is this figure that has reached 15 Tbps.

Having our own network is a major advantage in our business. It gives us much greater control over quality, because we are as close as possible to our customers and their end users. By contrast, most of our competitors rely on a third-party "transit" operator, which leaves them with less control over quality.

Why do we need such a huge capacity?

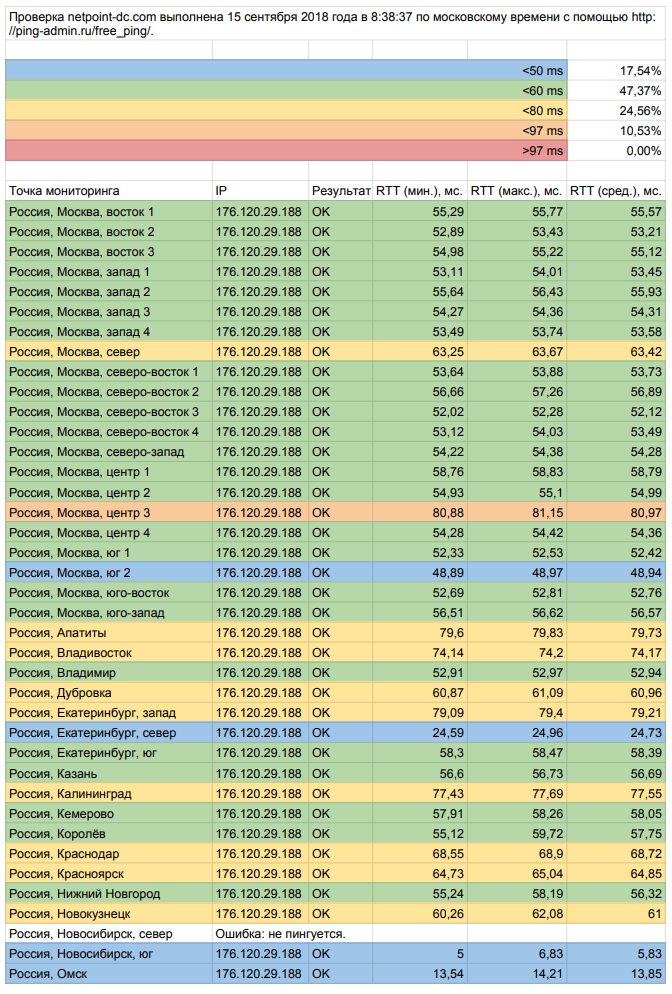

By managing our own backbone, we can offer our customers high-quality bandwidth and low latency anywhere in the world. It also helps us protect users against distributed denial-of-service (DDoS) attacks.

In fact, one of the reasons OVH needs so much excess capacity is to absorb the bursts of high-intensity attacks. It is not just a question of dealing with attacks, but of being able to withstand them in the first place. Our data for 2017 shows that DDoS attacks continue to grow in volume.

As a cloud provider, we naturally have far more outbound traffic than inbound traffic, and it continues to grow by around 36% a year. Nevertheless, it is still important for us to increase our inbound capacity, if only to avoid the congestion caused by DDoS attacks. This way, we can manage and filter attacks with our DDoS VAC system without legitimate traffic suffering from any saturation.

Excess capacity also increases availability. If, for example, we have an incident at one of our PoPs, or one of our partners (such as an operator or an Internet service provider) suffers a fibre cut, it does not matter. We can still avoid congestion and maintain quality of service in terms of low latency. We can do this because we are closely interconnected with our partners through several PoPs. If we connect to the same operator through two PoPs, we cannot exceed 50% utilisation on each PoP. If we are connected through three PoPs, that means 33% each, and so on. In the event of an incident or outage, the main difficulty lies in anticipating where the traffic will be rerouted (in other words, to which PoP).
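The headroom rule in the paragraph above can be written as a small calculation. This is only an illustrative sketch, not OVH's actual capacity-planning tooling: the function name `max_utilisation_per_pop` and the `surviving_pops` parameter are hypothetical, and the model assumes that all PoPs towards a given operator have equal capacity and share traffic evenly. The 50% and 33% figures quoted above correspond to the conservative case where a single remaining PoP must be able to carry all of the traffic.

```python
def max_utilisation_per_pop(n_pops: int, surviving_pops: int = 1) -> float:
    """Highest per-PoP utilisation (0..1) that still avoids congestion
    when only `surviving_pops` of the `n_pops` PoPs remain in service.

    Assumption (not from the article): every PoP has the same capacity
    and traffic is spread evenly across them.
    """
    if n_pops < 1:
        raise ValueError("n_pops must be at least 1")
    if not 1 <= surviving_pops <= n_pops:
        raise ValueError("surviving_pops must be between 1 and n_pops")
    # Total traffic is n_pops * u * capacity; the survivors can carry at most
    # surviving_pops * capacity, so u must not exceed surviving_pops / n_pops.
    return surviving_pops / n_pops


if __name__ == "__main__":
    for n in (2, 3, 4):
        limit = max_utilisation_per_pop(n)
        print(f"{n} PoPs: keep each below {limit:.0%}")
```

Under a less conservative assumption, where only one PoP is expected to fail at a time, passing `surviving_pops=n_pops - 1` gives a higher limit (for example, two thirds for three PoPs).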

A look at the network upgrades in the Asia-Pacific region

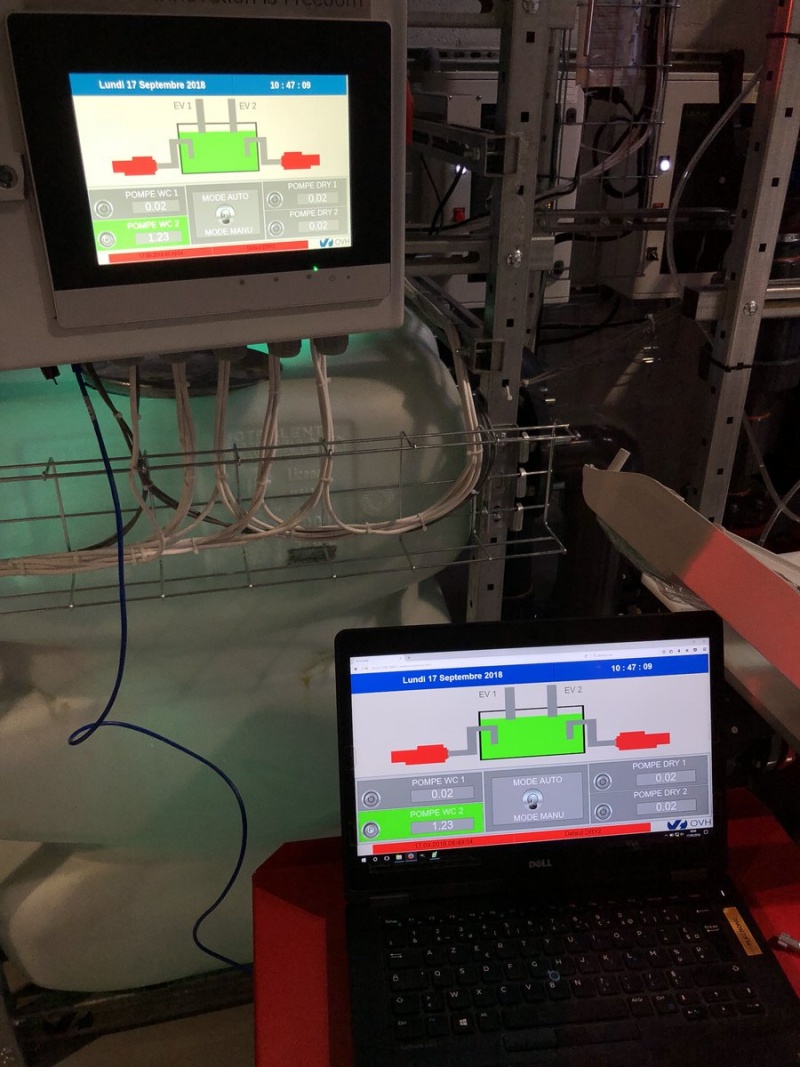

Our recent network upgrades in the Asia-Pacific region clearly demonstrate how OVH teams can act at every level of the backbone to improve availability and protect customers.

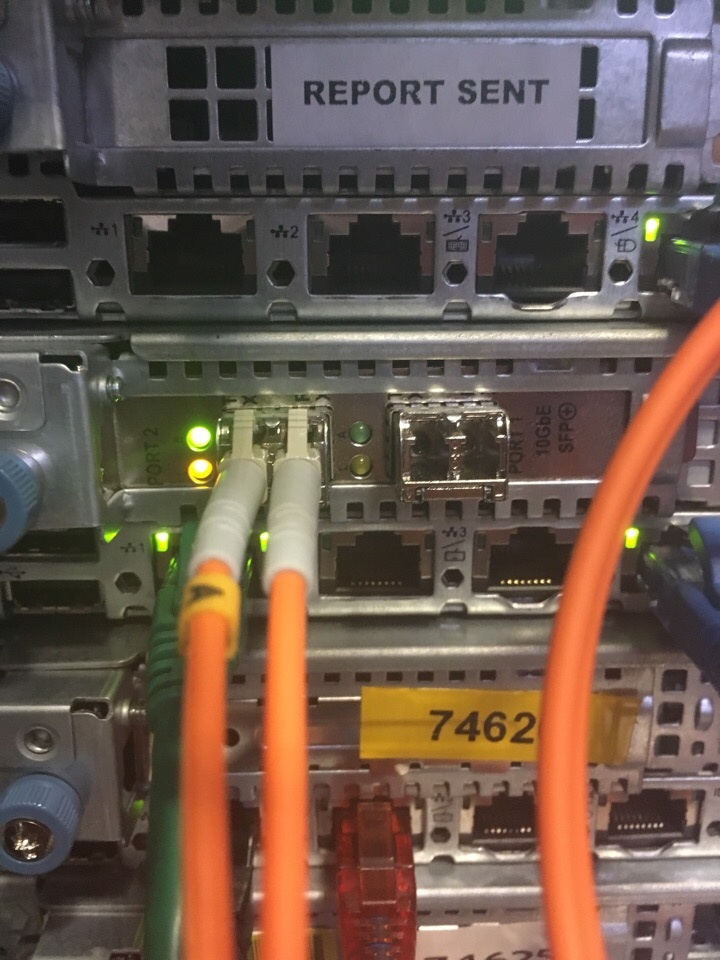

New core routers have been added to the data centres in Sydney and Singapore. Each router supports up to 4.8 Tbps of throughput.

We have also installed four new backbone routers (two in Singapore and two in Sydney), connected over 100 Gbps links. Thanks to these additions, OVH can connect to more providers and, as a result, extend our capacity beyond our own network.

OVH has also invested in 100 Gbps links to connect to other PoPs. By increasing our capacity to handle DDoS attacks, this has strengthened the security of our network.

Finally, we were able to gain precious milliseconds by adding a new low-latency link between two OVH data centres in the Asia-Pacific region (latency of 88 ms instead of 144 ms) and by changing the routing via Singapore.